TruthScan Detects North Korean Deepfake Attack on Defense Officials

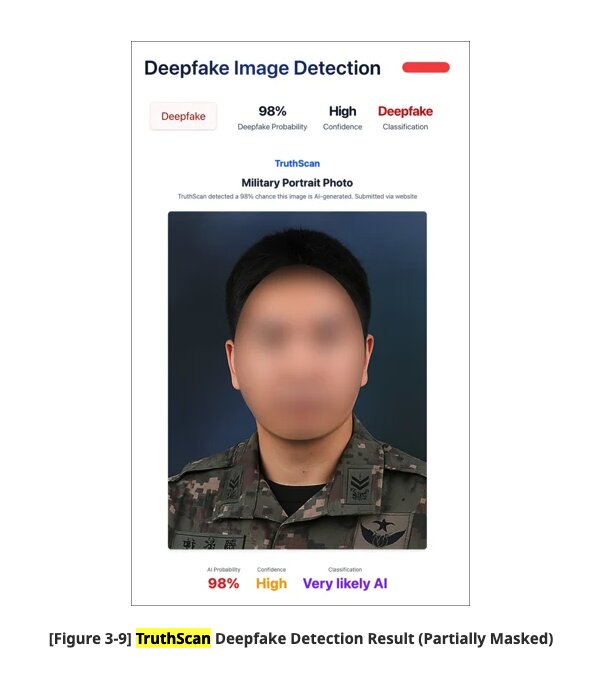

Researchers at the Genians Security Center used TruthScan's deepfake image detector to analyze a fraudulent government employee ID card used by the North Korean-backed hacking group known as Kimsuky APT.

The hackers created the deepfake ID for a spear-phishing campaign targeting South Korean defense officials last July. According to the Genians Security Center, TruthScan's AI-image analysis identified the ID image as a deepfake with 98% confidence.

According to FBI statistics, deepfake fraud has caused $200 million in losses in 2025, and other industry reports indicate that deepfake fraud schemes have increased by 2,137% over the past three years.

"The case analyzed by Genians shows just how much deepfakes are being weaponized in sophisticated cyberattacks," said Christian Perry, CEO of TruthScan. "We need to be treating high-risk AI technology the same way we treat weapons. We see deepfake detection being as relevant in cyberspace as metal detectors are in real life."

TruthScan's deepfake detection suite analyzes image patterns, pixel structures, and other data to identify AI-generated and deepfake content. The platform currently serves enterprise clients such as financial institutions, dating applications, government agencies, and newsrooms that need to verify content authenticity at scale.

Disclaimer

The information contained within this press release is reported as is but is believed to be factually accurate. This is not investment advice. This press release was published by the listed company and not a third party.

About

TruthScan is an AI detection-based cybersecurity tool that identifies deepfake media such as AI-generated audio, images, video, and text.

Media

Devan Leos (CCO)

[email protected]

Reniel Anca (PR contact)

[email protected]